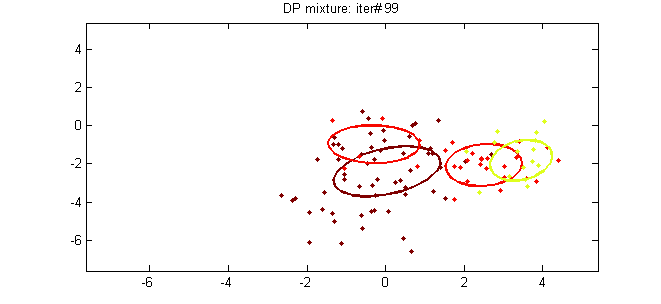

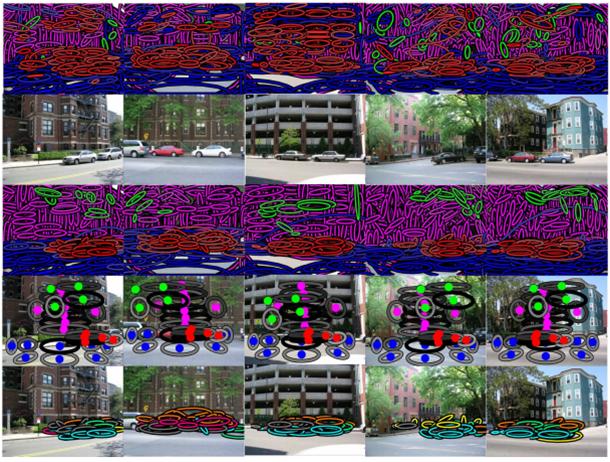

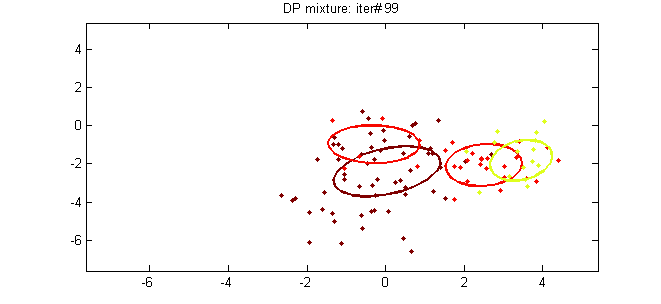

Clustering documents and gaussian data with...

June 30, 2014 · Vasilis Vryniotis · No comments

This article is the fifth part of the tutorial on Clustering with


Clustering with Dirichlet Process Mixture Model...

July 7, 2014 · Vasilis Vryniotis · 1 Comment

In the previous articles we discussed in detail the Dirichlet Process Mixture


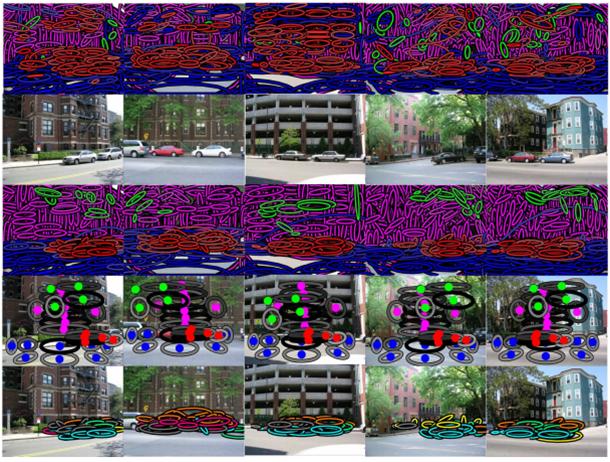

a guide for academic researchers –...

🔘 Paper page: arxiv.org/abs/2108.02497

Abstract: «This document gives a concise outline of some of the common mistakes that occur when


FBI Warns of AI Voice Scams

The FBI warns of AI voice scams, a chilling alert that highlights how artificial


Why Apple Intelligence Might Fall Short...

As the tech world buzzes with the unveiling of Apple Intelligence, expectations are soaring. The leap from iPhone to AI-Phone


Vibe Coding, Vibe Checking, and Vibe...

For the past decade and a half, I’ve been exploring the intersection of technology, education, and design as a professor


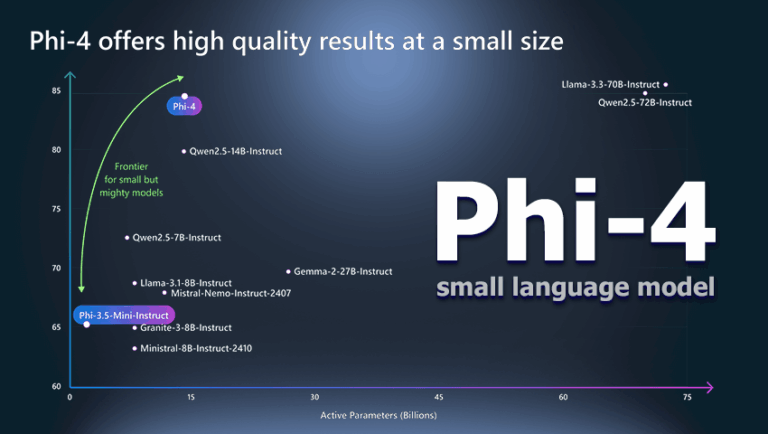

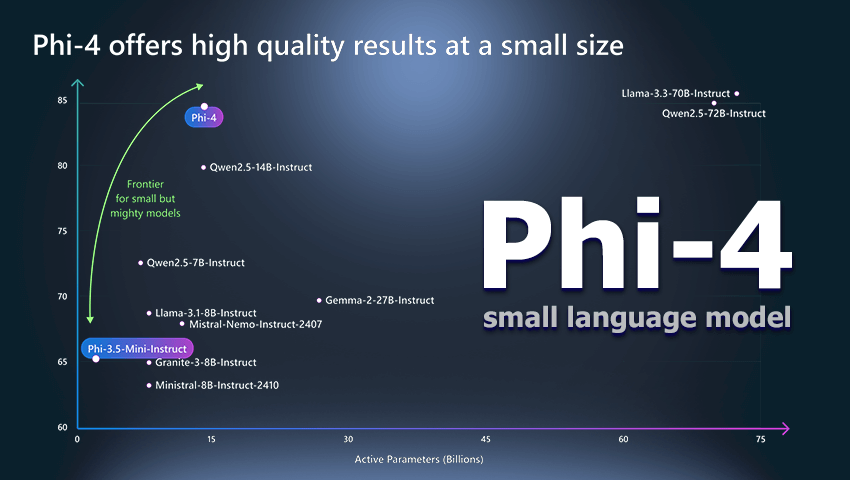

Microsoft launched the Phi-4 model with...

Microsoft has introduced the generative AI model Phi-4 with fully open weights on the Hugging Face platform. Since its presentation


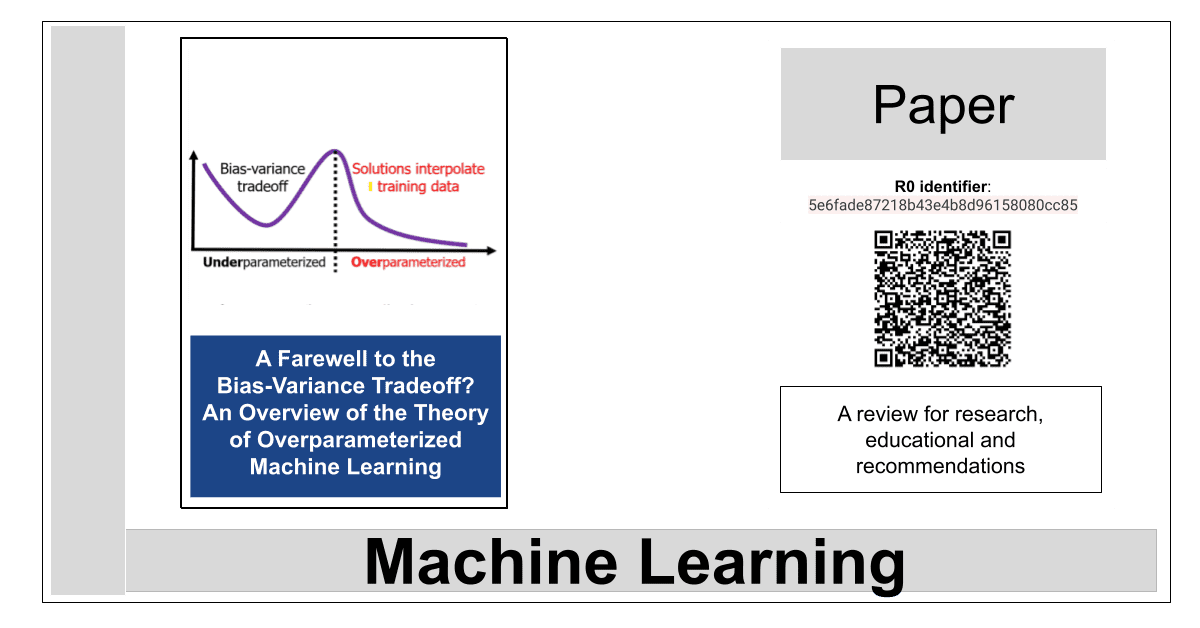

A Farewell to the Bias-Variance Tradeoff?...

Abstract: «The rapid recent progress in machine learning (ML) has raised a number of scientific questions that challenge the longstanding


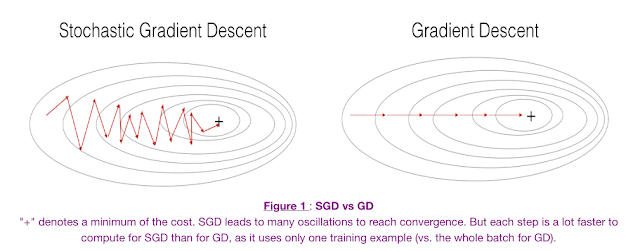

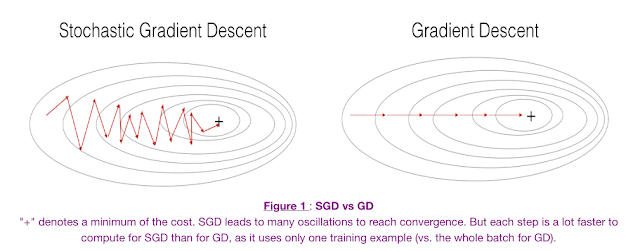

Optimization Algorithms for Machine Learning

I have been learning through Andrew Ng’s Deep Learning specialization on Coursera. I have completed the 1st of the 5


Welcome to the Personal Artificial Intelligence...

Like it or not, technology is everywhere. We long ago passed the time when computers were relegated to men
