Artificial Intelligence (AI) continues to transform industries with its speed, relevance, and accuracy. However, despite their impressive capabilities, AI systems often suffer from what is known as the AI reliability gap: the discrepancy between AI’s theoretical potential and its real-world performance. This gap shows up as unpredictable behavior, biased decisions, and errors that can have significant consequences, from misinformation in customer service to flawed medical diagnoses.

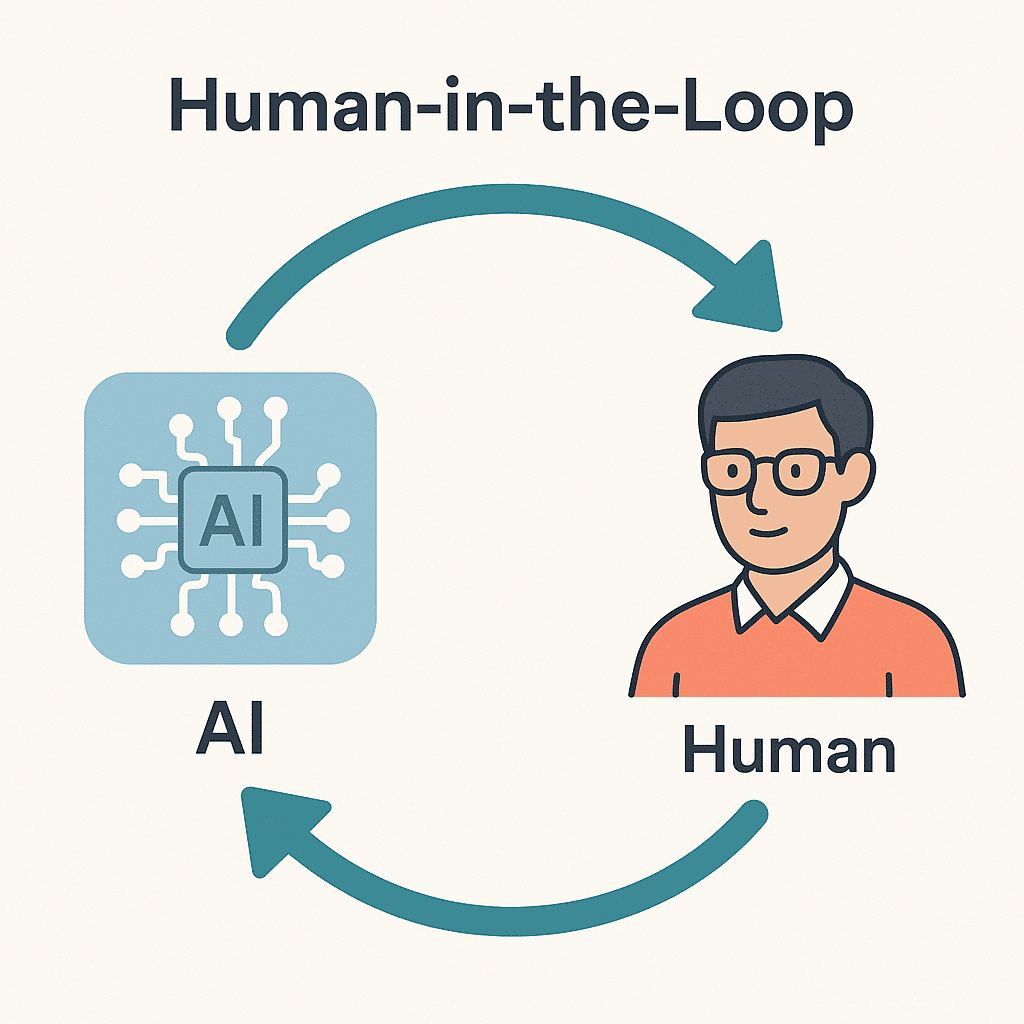

To address these challenges, Human-in-the-Loop (HITL) systems have emerged as a vital approach. HITL integrates human intuition, oversight, and expertise into AI evaluation and training, helping to keep AI models reliable, fair, and aligned with real-world complexities. This article explores the design of effective HITL systems, their importance in closing the AI reliability gap, and best practices informed by current trends and success stories.

Understanding the AI Reliability Gap and the Role of Humans

AI systems, despite their advanced algorithms, are not infallible, as the following real-world incidents show:

| Incident | Error Type | Potential HITL Intervention |
|---|---|---|
| Canadian airline’s AI chatbot gave a customer costly misinformation | Misinformation / incorrect response | Human review of chatbot responses to critical queries could catch and correct errors before they reach customers. |
| AI recruiting tool discriminated against applicants based on age | Bias / discrimination | Regular audits and human oversight of screening decisions can identify and address biased patterns in AI recommendations. |
| ChatGPT hallucinated fictitious court cases | Fabrication / hallucination | Human experts verifying AI-generated legal content can keep false information out of critical documents. |
| COVID-19 prediction models failed to detect the virus accurately | Prediction error / inaccuracy | Continuous human monitoring and validation of model outputs can help recalibrate predictions and flag anomalies early. |

These incidents underscore that AI alone cannot guarantee flawless outcomes. The reliability gap arises because AI models often lack transparency, contextual understanding, and the ability to handle edge cases or ethical dilemmas without human intervention.

Humans bring critical judgment, domain knowledge, and ethical reasoning that machines cannot yet fully replicate. Incorporating human feedback throughout the AI lifecycle, from training data annotation to real-time evaluation, helps mitigate errors, reduce bias, and improve AI trustworthiness.
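A bias audit like the one suggested for the recruiting tool in the table can start with something as simple as comparing selection rates across groups. The sketch below flags any group whose selection rate falls below 80% of the highest group's rate, a screening heuristic known as the "four-fifths rule" in US employee-selection guidelines. The function names and the `(group, recommended)` data layout are hypothetical. A flag is a prompt for human investigation, not proof of discrimination.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Fraction of positive recommendations per group.

    `decisions` is a list of (group, recommended) pairs, where `recommended`
    is True if the model advanced the candidate (hypothetical data layout).
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, recommended in decisions:
        totals[group] += 1
        if recommended:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def flag_disparities(decisions, threshold=0.8):
    """Groups whose selection rate is below `threshold` times the best group's rate.

    Flagged groups are queued for human review rather than auto-corrected.
    """
    rates = selection_rates(decisions)
    if not rates:
        return []
    best = max(rates.values())
    if best == 0:
        return []  # nobody was selected, so there is no ratio to compare
    return sorted(g for g, r in rates.items() if r / best < threshold)


if __name__ == "__main__":
    sample = (
        [("under_40", True)] * 6 + [("under_40", False)] * 4
        + [("40_plus", True)] * 3 + [("40_plus", False)] * 7
    )
    print(selection_rates(sample))   # {'under_40': 0.6, '40_plus': 0.3}
    print(flag_disparities(sample))  # ['40_plus']
```

In this made-up sample the older group is selected at half the rate of the younger group (0.3 / 0.6 = 0.5), well under the 0.8 threshold, so a human auditor would be asked to look into the screening decisions.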

What Is Human-in-the-Loop (HITL) in AI?

Human-in-the-Loop refers to systems where human input is actively integrated into AI processes to guide, correct, and enhance model behavior. HITL can involve:

- Validating and refining AI-generated predictions.

- Reviewing model decisions for fairness and bias.

- Handling ambiguous or complex scenarios.

- Providing qualitative user feedback to improve usability.

This creates a continuous feedback loop where AI learns from human expertise, resulting in models that better reflect real-world needs and ethical standards.
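A minimal sketch of that loop follows. It assumes a classifier that returns a single label per item and a reviewer who can confirm or override each label. `FeedbackStore` and `review_batch` are hypothetical names, and both the model and the reviewer are stand-in functions. In a real system the stored examples would feed a retraining or fine-tuning job.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class FeedbackStore:
    """Holds human-verified labels for the next training round (hypothetical)."""
    examples: List[Tuple[str, str]] = field(default_factory=list)
    corrections: int = 0

    def record(self, item: str, model_label: str, human_label: str) -> None:
        # The human label is treated as ground truth for retraining
        self.examples.append((item, human_label))
        if human_label != model_label:
            self.corrections += 1


def review_batch(
    items: List[str],
    predict: Callable[[str], str],
    reviewer: Callable[[str, str], str],
    store: FeedbackStore,
) -> List[str]:
    """Run the model, let a human confirm or override each output, and log the result."""
    final = []
    for item in items:
        model_label = predict(item)
        human_label = reviewer(item, model_label)  # the human has the final say
        store.record(item, model_label, human_label)
        final.append(human_label)
    return final


if __name__ == "__main__":
    def predict(text: str) -> str:
        # Stand-in model: a naive keyword rule
        return "refund_eligible" if "refund" in text else "other"

    def reviewer(text: str, label: str) -> str:
        # Stand-in reviewer who knows a policy exception the model missed
        return "other" if "expired" in text else label

    store = FeedbackStore()
    queries = ["refund for expired ticket", "refund for cancelled flight", "seat change"]
    print(review_batch(queries, predict, reviewer, store))
    # ['other', 'refund_eligible', 'other']
    print(store.corrections)  # 1
```

Every reviewed item becomes a training example, not only the corrected ones, so confirmations also reinforce what the model already does well. The correction count gives a rough measure of how much the model still depends on human input.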

Key Strategies for Designing Effective HITL Systems

Designing a robust HITL system requires balancing automation with human oversight to maximize efficiency without sacrificing quality.
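One common pattern for striking this balance is confidence-based routing. Predictions the model is confident about are accepted automatically, and the rest go to a human review queue. The sketch below assumes the model reports a confidence score between 0 and 1. The `route` function and the 0.9 default threshold are illustrative, not a prescribed design.

```python
from typing import Iterable, List, Tuple


def route(
    predictions: Iterable[Tuple[str, str, float]],
    threshold: float = 0.9,
) -> Tuple[List[Tuple[str, str]], List[Tuple[str, str, float]]]:
    """Split (item, label, confidence) triples into auto-accepted and human-review lists.

    `threshold` is an illustrative value; in practice it would be tuned against
    reviewer capacity and the cost of an uncaught error.
    """
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be in [0, 1]")
    auto, review = [], []
    for item, label, confidence in predictions:
        if confidence >= threshold:
            auto.append((item, label))
        else:
            review.append((item, label, confidence))
    # Put the least confident cases at the front of the review queue
    review.sort(key=lambda t: t[2])
    return auto, review


if __name__ == "__main__":
    preds = [("q1", "approve", 0.97), ("q2", "deny", 0.55), ("q3", "approve", 0.81)]
    auto, review = route(preds)
    print(auto)    # [('q1', 'approve')]
    print(review)  # [('q2', 'deny', 0.55), ('q3', 'approve', 0.81)]
```

Raising the threshold sends more cases to reviewers and lowers the risk of uncaught errors; lowering it increases automation at the cost of more unchecked mistakes.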

Latest Trends in HITL and AI Evaluation

- Multimodal AI Models: Modern AI systems increasingly process text, images, and audio, so HITL systems must adapt to diverse data types.

- Transparency and Explainability: AI systems are increasingly expected to explain their decisions, which fosters trust and accountability and makes explainability a key focus in HITL design.

- Real-time Human Feedback Integration: Emerging platforms support seamless human input during AI operation, enabling dynamic correction and learning.

- AI Superagency: Emerging visions of the future workplace see AI augmenting human decision-making rather than replacing it, emphasizing collaborative HITL frameworks.

- Continuous Monitoring and Model Drift Detection: Ongoing human evaluation is critical for detecting and correcting model degradation over time.
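Disagreement between reviewers and the model is one drift signal a HITL system already produces as a by-product of review. The sketch below assumes each reviewed item yields a (model label, human label) pair. It tracks the agreement rate over a sliding window and flags possible drift when that rate falls below a baseline. The class name and default settings are hypothetical.

```python
from collections import deque


class AgreementMonitor:
    """Track how often human reviewers agree with the model over a sliding window.

    A sustained drop in agreement is treated as a possible sign of model drift.
    The class name and default settings are hypothetical, for illustration only.
    """

    def __init__(self, window: int = 100, baseline: float = 0.95, tolerance: float = 0.05):
        self.recent = deque(maxlen=window)  # True where the human kept the model's label
        self.baseline = baseline
        self.tolerance = tolerance

    def record(self, model_label: str, human_label: str) -> None:
        self.recent.append(model_label == human_label)

    def agreement_rate(self) -> float:
        # With no reviews yet there is no evidence of disagreement
        if not self.recent:
            return 1.0
        return sum(self.recent) / len(self.recent)

    def drift_suspected(self) -> bool:
        # Wait for a full window so a handful of reviews cannot trigger an alert
        if len(self.recent) < self.recent.maxlen:
            return False
        return self.agreement_rate() < self.baseline - self.tolerance


if __name__ == "__main__":
    monitor = AgreementMonitor(window=10, baseline=0.9, tolerance=0.1)
    for _ in range(10):
        monitor.record("spam", "spam")
    print(monitor.drift_suspected())  # False
    for _ in range(4):
        monitor.record("spam", "not_spam")  # reviewers start overriding the model
    print(monitor.agreement_rate(), monitor.drift_suspected())  # 0.6 True
```

Because the window has a fixed length, old agreements age out as new overrides arrive, so the alert reflects recent behavior rather than the model's lifetime average.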

Conclusion

The AI reliability gap highlights the indispensable role of humans in AI development and deployment. Effective Human-in-the-Loop systems create a symbiotic partnership where human intelligence complements artificial intelligence, resulting in more reliable, fair, and ethical AI solutions.